VPP Linux CP - Part6

About this series

Ever since I first saw VPP - the Vector Packet Processor - I have been deeply impressed with its performance and versatility. For those of us who have used Cisco IOS/XR devices, like the classic ASR (aggregation services router), VPP will look and feel quite familiar as many of the approaches are shared between the two. One thing notably missing is the higher-level control plane, that is to say: there is no OSPF or ISIS, BGP, LDP and the like. This series of posts details my work on a VPP plugin called the Linux Control Plane, or LCP for short, which creates Linux network devices that mirror their VPP dataplane counterparts. IPv4 and IPv6 traffic, and associated protocols like ARP and IPv6 Neighbor Discovery, can now be handled by Linux, while the heavy lifting of packet forwarding is done by the VPP dataplane. Or, said another way: this plugin will allow Linux to use VPP as a software ASIC for fast forwarding, filtering, NAT, and so on, while keeping control of the interface state (links, addresses and routes) itself. When the plugin is completed, running software like FRR or Bird on top of VPP and achieving >100Mpps and >100Gbps forwarding rates will be well within reach!

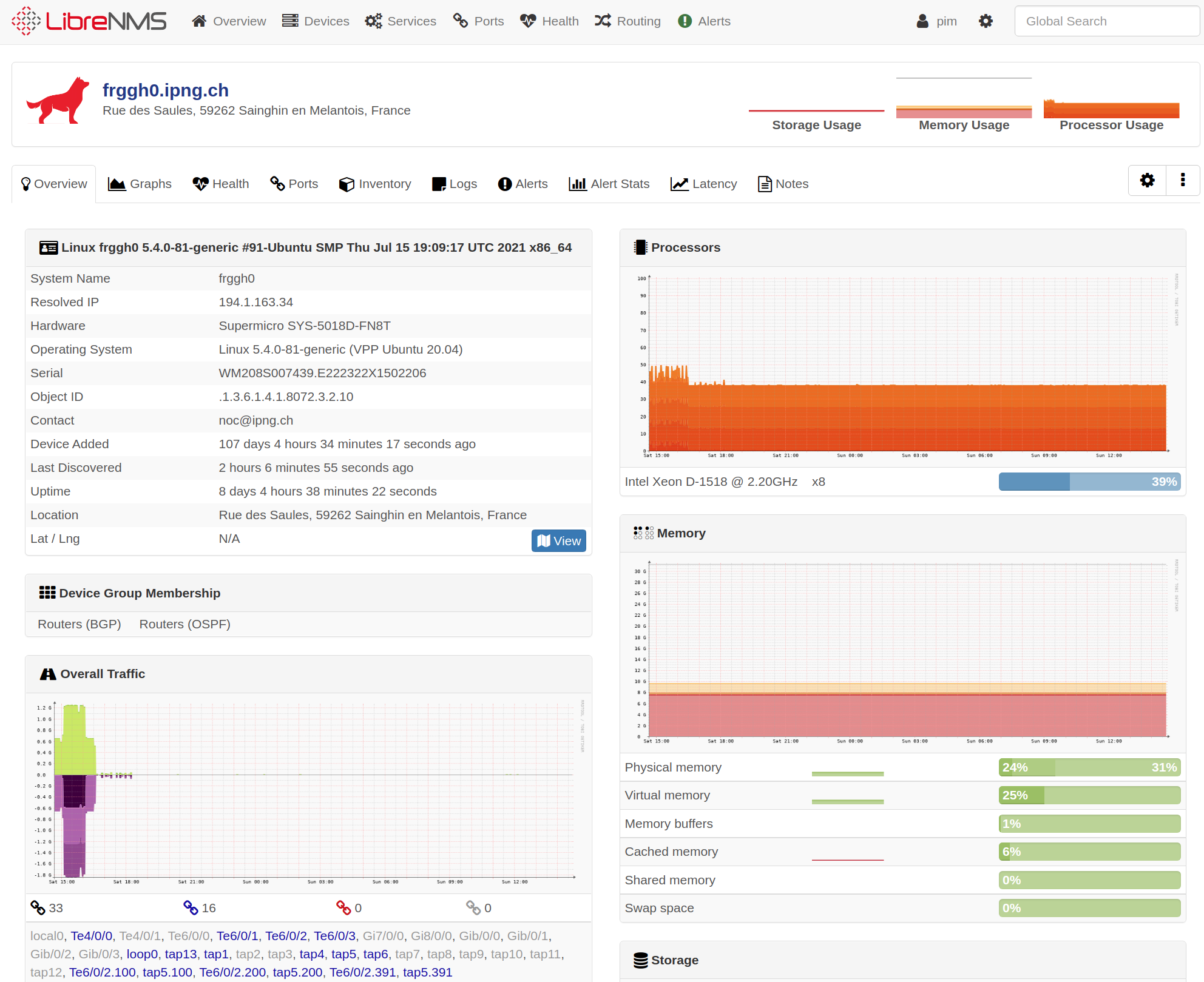

SNMP in VPP

Now that the Interface Mirror and Netlink Listener plugins are in good shape, this post shows a few finishing touches. First off, although the native habitat of VPP is Prometheus, many folks still run classic network monitoring systems like the popular Observium or its sibling LibreNMS. Although the metrics-based approach is modern, we really ought to have an old-skool SNMP interface so that we can swear it by the Old Gods and the New.

VPP’s Stats Segment

VPP maintains lots of interesting statistics at runtime, for example for nodes and their activity, but also, importantly, for each interface known to the system. So I take a look at the stats segment, configured in startup.conf, and notice that VPP creates a socket at /run/vpp/stats.sock which can be connected to. There are also a few introspection tools, notably vpp_get_stats, which can list, dump once, or continuously dump the data:

pim@hippo:~$ vpp_get_stats socket-name /run/vpp/stats.sock ls | wc -l
3800
pim@hippo:~$ vpp_get_stats socket-name /run/vpp/stats.sock dump /if/names
[0]: local0 /if/names
[1]: TenGigabitEthernet3/0/0 /if/names
[2]: TenGigabitEthernet3/0/1 /if/names
[3]: TenGigabitEthernet3/0/2 /if/names
[4]: TenGigabitEthernet3/0/3 /if/names
[5]: GigabitEthernet5/0/0 /if/names
[6]: GigabitEthernet5/0/1 /if/names
[7]: GigabitEthernet5/0/2 /if/names
[8]: GigabitEthernet5/0/3 /if/names
[9]: TwentyFiveGigabitEthernet11/0/0 /if/names
[10]: TwentyFiveGigabitEthernet11/0/1 /if/names
[11]: tap2 /if/names
[12]: TenGigabitEthernet3/0/1.1 /if/names
[13]: tap2.1 /if/names
[14]: TenGigabitEthernet3/0/1.2 /if/names
[15]: tap2.2 /if/names
[16]: TenGigabitEthernet3/0/1.3 /if/names
[17]: tap2.3 /if/names
[18]: tap3 /if/names
[19]: tap4 /if/names

Alright! Clearly, the /if/ prefix is the one I’m looking for. I find a Python library that allows this data to be memory-mapped and read directly as a dictionary, including some neat aggregation functions (see src/vpp-api/python/vpp_papi/vpp_stats.py):

Counters can be accessed in either dimension.
stat['/if/rx'] - returns 2D lists
stat['/if/rx'][0] - returns counters for all interfaces for thread 0
stat['/if/rx'][0][1] - returns counter for interface 1 on thread 0
stat['/if/rx'][0][1]['packets'] - returns the packet counter for interface 1 on thread 0
stat['/if/rx'][:, 1] - returns the counters for interface 1 on all threads
stat['/if/rx'][:, 1].packets() - returns the packet counters for interface 1 on all threads
stat['/if/rx'][:, 1].sum_packets() - returns the sum of packet counters for interface 1 on all threads
stat['/if/rx-miss'][:, 1].sum() - returns the sum of packet counters for interface 1 on all threads for simple counters

Alright, so let’s grab that file and refactor it into a small library for me to use; I do this in [this commit].
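To make that thread-by-interface layout concrete, here is a minimal pure-Python sketch of the aggregation that runs without a VPP instance. It is not the vpp_stats.py implementation: the Combined tuple, the helper names and the counter values are all hypothetical, chosen only to mirror the docstring above.

```python
from typing import List, NamedTuple


class Combined(NamedTuple):
    """Hypothetical stand-in for one combined counter cell (packets + octets)."""
    packets: int
    octets: int


def column(matrix: List[List[Combined]], if_index: int) -> List[Combined]:
    """Like stat['/if/rx'][:, if_index]: one cell per thread for one interface."""
    return [row[if_index] for row in matrix]


def sum_packets(matrix: List[List[Combined]], if_index: int) -> int:
    """Like stat['/if/rx'][:, if_index].sum_packets()."""
    return sum(cell.packets for cell in column(matrix, if_index))


def sum_octets(matrix: List[List[Combined]], if_index: int) -> int:
    """Same idea for the octet half of each combined counter."""
    return sum(cell.octets for cell in column(matrix, if_index))


def sum_simple(matrix: List[List[int]], if_index: int) -> int:
    """Like stat['/if/rx-miss'][:, if_index].sum(): simple counters are plain ints."""
    return sum(row[if_index] for row in matrix)


# Invented values: rows are threads (0 = main, 1 = worker), columns are sw_if_index.
rx = [
    [Combined(0, 0), Combined(10, 1500)],
    [Combined(0, 0), Combined(32, 4096)],
]
rx_miss = [[0, 1], [0, 2]]

print(sum_packets(rx, 1), sum_octets(rx, 1), sum_simple(rx_miss, 1))  # 42 5596 3
```

As I understand it, VPP keeps counters per thread so that workers never contend on a shared cache line; the price is that a per-interface total only exists once the rows are added up, which is exactly what an SNMP agent needs to report.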

VPP’s API

In a previous project, I already got a little bit of exposure to the Python API (vpp_papi), and it’s pretty straightforward to use. Each API is published in a JSON file in /usr/share/vpp/api/{core,plugins}/, and those can be read by the Python library and exposed to callers. This gives me full programmatic read/write access to the VPP runtime configuration, which is super cool.

There are dozens of APIs to call (the Linux CP plugin even added one!), and in the case of enumerating interfaces, the definition is in core/interface.api.json: there is an element called services.sw_interface_dump which shows that its reply is sw_interface_details, and in those messages we can see all the fields that will be set in the request and all those that will be present in the response. Nice! Here’s a quick demonstration:

from vpp_papi import VPPApiClient
import os
import fnmatch

vpp_json_dir = '/usr/share/vpp/api/'

# construct a list of all the json api files
jsonfiles = []
for root, dirnames, filenames in os.walk(vpp_json_dir):
    for filename in fnmatch.filter(filenames, '*.api.json'):
        jsonfiles.append(os.path.join(root, filename))

# connect to the VPP binary API socket
vpp = VPPApiClient(apifiles=jsonfiles, server_address='/run/vpp/api.sock')
vpp.connect("test-client")

v = vpp.api.show_version()
print('VPP version is %s' % v.version)

# sw_interface_dump returns a list of sw_interface_details replies
iface_list = vpp.api.sw_interface_dump()
for iface in iface_list:
    print("idx=%d name=%s mac=%s mtu=%d flags=%d" % (iface.sw_if_index,
          iface.interface_name, iface.l2_address, iface.mtu[0], iface.flags))

vpp.disconnect()

The output:

$ python3 vppapi-test.py
VPP version is 21.10-rc0~325-g4976c3b72
idx=0 name=local0 mac=00:00:00:00:00:00 mtu=0 flags=0
idx=1 name=TenGigabitEthernet3/0/0 mac=68:05:ca:32:46:14 mtu=9000 flags=0
idx=2 name=TenGigabitEthernet3/0/1 mac=68:05:ca:32:46:15 mtu=1500 flags=3
idx=3 name=TenGigabitEthernet3/0/2 mac=68:05:ca:32:46:16 mtu=9000 flags=1
idx=4 name=TenGigabitEthernet3/0/3 mac=68:05:ca:32:46:17 mtu=9000 flags=1
idx=5 name=GigabitEthernet5/0/0 mac=a0:36:9f:c8:a0:54 mtu=9000 flags=0
idx=6 name=GigabitEthernet5/0/1 mac=a0:36:9f:c8:a0:55 mtu=9000 flags=0
idx=7 name=GigabitEthernet5/0/2 mac=a0:36:9f:c8:a0:56 mtu=9000 flags=0
idx=8 name=GigabitEthernet5/0/3 mac=a0:36:9f:c8:a0:57 mtu=9000 flags=0
idx=9 name=TwentyFiveGigabitEthernet11/0/0 mac=6c:b3:11:20:e0:c4 mtu=9000 flags=0
idx=10 name=TwentyFiveGigabitEthernet11/0/1 mac=6c:b3:11:20:e0:c6 mtu=9000 flags=0
idx=11 name=tap2 mac=02:fe:07:ae:31:c3 mtu=1500 flags=3
idx=12 name=TenGigabitEthernet3/0/1.1 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=13 name=tap2.1 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=14 name=TenGigabitEthernet3/0/1.2 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=15 name=tap2.2 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=16 name=TenGigabitEthernet3/0/1.3 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=17 name=tap2.3 mac=00:00:00:00:00:00 mtu=1500 flags=3
idx=18 name=tap3 mac=02:fe:95:db:3f:c4 mtu=9000 flags=3
idx=19 name=tap4 mac=02:fe:17:06:fc:af mtu=9000 flags=3
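The flags column is a bitmask. Assuming the values of VPP's if_status_flags enum (IF_STATUS_API_FLAG_ADMIN_UP = 1, IF_STATUS_API_FLAG_LINK_UP = 2), flags=3 means admin up with the link up, and flags=1 means admin up with the link down. Here is a small sketch of how that could map onto the up(1)/down(2) values that IF-MIB expects for ifAdminStatus and ifOperStatus; the helper names are hypothetical.

```python
# Bit values as I understand them from VPP's if_status_flags enum.
IF_STATUS_API_FLAG_ADMIN_UP = 1
IF_STATUS_API_FLAG_LINK_UP = 2

# IF-MIB (RFC 2863) enumeration values shared by ifAdminStatus / ifOperStatus.
SNMP_UP = 1
SNMP_DOWN = 2


def admin_status(flags: int) -> int:
    """Hypothetical helper: map the admin-up bit to ifAdminStatus."""
    return SNMP_UP if flags & IF_STATUS_API_FLAG_ADMIN_UP else SNMP_DOWN


def oper_status(flags: int) -> int:
    """Hypothetical helper: map the link-up bit to ifOperStatus."""
    return SNMP_UP if flags & IF_STATUS_API_FLAG_LINK_UP else SNMP_DOWN


# A few rows from the output above.
for name, flags in [("local0", 0), ("TenGigabitEthernet3/0/2", 1), ("tap2", 3)]:
    print(f"{name}: ifAdminStatus={admin_status(flags)} ifOperStatus={oper_status(flags)}")
```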

So I added a little abstraction with some error handling and one main function to return interfaces as a Python dictionary of those sw_interface_details tuples in [this commit].
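One detail makes it cheap to combine the two sources: the index in the Stats Segment's /if/names list matches the sw_if_index from the API (compare [12]: TenGigabitEthernet3/0/1.1 in the vpp_get_stats dump with idx=12 in the API output). Here is a sketch of a dictionary keyed by sw_if_index that merges both. IfaceDetails is a hypothetical stand-in carrying only a few sw_interface_details fields (with mtu flattened to a single int), and the counter values are invented.

```python
from typing import Dict, List, NamedTuple


class IfaceDetails(NamedTuple):
    """Hypothetical stand-in for a few sw_interface_details fields."""
    sw_if_index: int
    interface_name: str
    l2_address: str
    mtu: int
    flags: int


def build_view(details: List[IfaceDetails], rx_packets: List[int]) -> Dict[int, dict]:
    """Merge API metadata with per-interface counters, keyed by sw_if_index.

    rx_packets is indexed by sw_if_index, like a Stats Segment vector. If the
    counters were read before an interface was created, that interface has no
    slot yet, so missing slots default to 0.
    """
    view = {}
    for d in details:
        idx = d.sw_if_index
        view[idx] = {
            "name": d.interface_name,
            "mac": d.l2_address,
            "mtu": d.mtu,
            "rx_packets": rx_packets[idx] if idx < len(rx_packets) else 0,
        }
    return view


details = [
    IfaceDetails(0, "local0", "00:00:00:00:00:00", 0, 0),
    IfaceDetails(2, "TenGigabitEthernet3/0/1", "68:05:ca:32:46:15", 1500, 3),
    IfaceDetails(11, "tap2", "02:fe:07:ae:31:c3", 1500, 3),
]
rx_packets = [0, 0, 1234]  # invented counters; no slot for index 11 yet

view = build_view(details, rx_packets)
print(view[2]["name"], view[2]["rx_packets"], view[11]["rx_packets"])
```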

AgentX

Now that I am able to enumerate the interfaces and their metadata (like admin/oper status, link speed, name, index, MAC address, and what have you), as well as read the highly sought-after interface statistics as 64-bit counters (with a wealth of extra information like broadcast/multicast/unicast packets, octets received and transmitted, errors and drops), I am ready to tie things together.

It took a bit of sleuthing, but I eventually found a library on SourceForge (!) that has a rudimentary implementation of RFC 2741, the SNMP Agent Extensibility (AgentX) Protocol. In a nutshell, this allows an external program to connect to the main SNMP daemon, register an interest in certain OIDs, and get called whenever SNMPd is queried for them.

The flow is pretty simple (see section 6.2 of the RFC). The Agent (client):

- opens a TCP or Unix domain socket to the SNMPd;
- sends an Open PDU, which the server will accept or reject with a Response PDU;
- (optionally) sends a Ping PDU, to which the server will respond;
- registers an interest in a subtree with a Register PDU.
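Every one of those PDUs starts with the same fixed 20-byte header from RFC 2741 section 6.1: version, type, flags and a reserved byte, followed by the session ID, transaction ID, packet ID and payload length as 32-bit integers, whose byte order is signalled by the NETWORK_BYTE_ORDER flag. A minimal sketch using Python's struct module; this is not the API of the SourceForge library, and the function names are mine.

```python
import struct

# PDU type codes from RFC 2741 (subset relevant to a read-only agent).
AGENTX_OPEN_PDU = 1
AGENTX_REGISTER_PDU = 3
AGENTX_GET_PDU = 5
AGENTX_GETNEXT_PDU = 6
AGENTX_GETBULK_PDU = 7
AGENTX_PING_PDU = 13
AGENTX_RESPONSE_PDU = 18

# h.flags bit: when set, multi-byte fields are big-endian (little-endian otherwise).
NETWORK_BYTE_ORDER = 0x10

# version, type, flags, reserved, session, transaction, packet, payload length
FIELDS = "BBBBIIII"


def pack_header(pdu_type: int, session_id: int, transaction_id: int,
                packet_id: int, payload_length: int) -> bytes:
    """Build a 20-byte AgentX header, always sent in network byte order."""
    return struct.pack("!" + FIELDS, 1, pdu_type, NETWORK_BYTE_ORDER, 0,
                       session_id, transaction_id, packet_id, payload_length)


def unpack_header(data: bytes) -> dict:
    """Parse a header, honouring whichever byte order the sender chose."""
    order = "!" if data[2] & NETWORK_BYTE_ORDER else "<"
    (version, pdu_type, flags, _reserved, session_id, transaction_id,
     packet_id, payload_length) = struct.unpack(order + FIELDS, data[:20])
    return {"version": version, "type": pdu_type, "flags": flags,
            "session_id": session_id, "transaction_id": transaction_id,
            "packet_id": packet_id, "payload_length": payload_length}


# A Ping PDU in the default context carries no payload.
ping = pack_header(AGENTX_PING_PDU, session_id=42, transaction_id=1,
                   packet_id=7, payload_length=0)
print(len(ping), unpack_header(ping))
```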

It then waits and gets called by the SNMPd with Get PDUs (to retrieve a single value), GetNext PDUs (to enable snmpwalk) and GetBulk PDUs (to retrieve a whole subsection of the MIB), all of which are answered with a Response PDU.
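GetNext is what makes snmpwalk work: the agent returns the first OID that sorts strictly after the one it was asked for. Python tuples already compare in the same lexicographic order as OIDs (a prefix sorts before its children), so a sorted list plus bisect is enough for a sketch. This simplifies the RFC 2741 search ranges (it ignores the include flag and the end of the range), and the dataset is an invented fragment of ifTable.

```python
import bisect
from typing import Dict, List, Optional, Tuple

Oid = Tuple[int, ...]
IF_ENTRY: Oid = (1, 3, 6, 1, 2, 1, 2, 2, 1)  # ifTable.ifEntry


def get_next(values: Dict[Oid, object], sorted_oids: List[Oid],
             oid: Oid) -> Optional[Tuple[Oid, object]]:
    """Return the first (oid, value) strictly after oid, or None (endOfMibView)."""
    i = bisect.bisect_right(sorted_oids, oid)
    if i == len(sorted_oids):
        return None
    found = sorted_oids[i]
    return found, values[found]


# Invented fragment: column 1 is ifIndex, column 2 is ifDescr.
values = {
    IF_ENTRY + (1, 1): 1,
    IF_ENTRY + (1, 2): 2,
    IF_ENTRY + (2, 1): "local0",
    IF_ENTRY + (2, 2): "TenGigabitEthernet3/0/0",
}
sorted_oids = sorted(values)

# Emulate snmpwalk: keep asking for the next OID until we leave the subtree.
oid = IF_ENTRY
while True:
    result = get_next(values, sorted_oids, oid)
    if result is None or result[0][:len(IF_ENTRY)] != IF_ENTRY:
        break
    oid, value = result
    print(".".join(map(str, oid)), value)
```

The walk visits the table column by column, which is why snmpwalk lists every ifIndex before the first ifDescr.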

If the Agent is to support writing, it will also have to implement the TestSet, CommitSet, UndoSet and CleanupSet PDUs. For this agent we don’t need those, so I’ll just ignore such requests and implement the read-only parts. Sounds easy :)

The first order of business is to create the values for two main MIBs of interest:

- .iso.org.dod.internet.mgmt.mib-2.interfaces.ifTable - This table is an older variant and contains a bunch of relevant fields, one row per interface, notably ifIndex, ifDescr, ifType, ifMtu, ifSpeed, ifPhysAddress, ifOperStatus, ifAdminStatus and a bunch of 32-bit counters for octets/packets in and out of the interfaces.

- .iso.org.dod.internet.mgmt.mib-2.ifMIB.ifMIBObjects.ifXTable - This table is a makeover of the other one (the X here stands for eXtra), and adds ifName, a few 64-bit counters for the interface stats, and ifHighSpeed, which is in megabits per second instead of the bits per second used by ifSpeed in the older table.
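Rows in both tables are addressed as <entry OID>.<column>.<ifIndex>, and two details matter when filling them from VPP. First, RFC 2863 requires ifIndex to be greater than zero, so sw_if_index 0 (local0) cannot be used as-is; the sketch below assumes an offset of one, which is my choice and not necessarily what the agent does. Second, ifSpeed is a Gauge32 in bits per second, so any interface faster than about 4.29 Gbit/s has to report the maximum 4,294,967,295 while ifHighSpeed carries the real value in Mbit/s. Column numbers are from IF-MIB; the helper names are hypothetical.

```python
IF_ENTRY = "1.3.6.1.2.1.2.2.1"      # ifTable.ifEntry
IFX_ENTRY = "1.3.6.1.2.1.31.1.1.1"  # ifXTable.ifXEntry
IF_SPEED = 5                        # ifTable column: ifSpeed (bits per second)
IF_HIGH_SPEED = 15                  # ifXTable column: ifHighSpeed (Mbit/s)

GAUGE32_MAX = 4_294_967_295


def if_index(sw_if_index: int) -> int:
    """Assumed mapping: ifIndex must be >= 1, so shift VPP's 0-based index."""
    return sw_if_index + 1


def oid(entry: str, column: int, sw_if_index: int) -> str:
    return f"{entry}.{column}.{if_index(sw_if_index)}"


def if_speed(bps: int) -> int:
    """ifSpeed saturates at the Gauge32 maximum."""
    return min(bps, GAUGE32_MAX)


def if_high_speed(bps: int) -> int:
    """ifHighSpeed is in units of 1,000,000 bits per second."""
    return bps // 1_000_000


for name, sw_if_index, bps in [("GigabitEthernet5/0/0", 5, 1_000_000_000),
                               ("TenGigabitEthernet3/0/0", 1, 10_000_000_000),
                               ("TwentyFiveGigabitEthernet11/0/0", 9, 25_000_000_000)]:
    print(name)
    print(" ", oid(IF_ENTRY, IF_SPEED, sw_if_index), if_speed(bps))
    print(" ", oid(IFX_ENTRY, IF_HIGH_SPEED, sw_if_index), if_high_speed(bps))
```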

Populating these MIBs can be done periodically by retrieving the interfaces from VPP and then simply walking the dictionary with Stats Segment data. I then register these two main MIB entry points with SNMPd as I connect to it, and spit out the correct values when asked with Get or GetNext PDUs, by issuing a corresponding Response PDU to the SNMP server, which takes care of all the rest!
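Because the data is refreshed every polling period while SNMPd may be asking questions at any moment, one reasonable approach is to build a complete new snapshot on each poll and swap it in with a single assignment, so a request never sees half of the old data and half of the new. A hedged sketch: the class and function names are hypothetical, and the collect callback stands in for the VPP API and Stats Segment reads.

```python
import threading
from typing import Callable, Dict, List, Tuple

Oid = Tuple[int, ...]


class ServingDataset:
    """Hypothetical holder for the OIDs currently being served."""

    def __init__(self) -> None:
        # One pair, never mutated after creation: (values, sorted OIDs).
        self._snapshot: Tuple[Dict[Oid, object], List[Oid]] = ({}, [])

    def replace(self, values: Dict[Oid, object]) -> None:
        # Build the new snapshot fully, then rebind a single attribute; readers
        # that already fetched the old pair keep a consistent view.
        self._snapshot = (dict(values), sorted(values))

    def snapshot(self) -> Tuple[Dict[Oid, object], List[Oid]]:
        return self._snapshot

    def __len__(self) -> int:
        return len(self._snapshot[0])


def poll(dataset: ServingDataset, collect: Callable[[], Dict[Oid, object]],
         period: float, stop: threading.Event) -> None:
    """Refresh the dataset every `period` seconds until `stop` is set."""
    while not stop.is_set():
        dataset.replace(collect())
        stop.wait(period)


# Demo: a collector that ends the loop after one refresh.
stop = threading.Event()
ds = ServingDataset()


def collect_once() -> Dict[Oid, object]:
    stop.set()
    return {(1, 3, 6, 1, 2, 1, 2, 2, 1, 1, 1): 1}


poll(ds, collect_once, period=30, stop=stop)
print(len(ds))
```

Rebinding one attribute is atomic in CPython, which is what makes the lock-free swap reasonable here; a request handler should call snapshot() once and work only with that pair.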

The resulting code is in [this commit] but you can also check out the whole thing on [Github].

Building

Shipping a bunch of Python files around is not ideal, so I decide to bundle this stuff together into a single binary that I can easily distribute to my machines. I simply install pyinstaller with pip and run it:

sudo pip install pyinstaller
pyinstaller vpp-snmp-agent.py --onefile

## Run it on console
dist/vpp-snmp-agent -h
usage: vpp-snmp-agent [-h] [-a ADDRESS] [-p PERIOD] [-d]

optional arguments:
  -h, --help  show this help message and exit
  -a ADDRESS  Location of the SNMPd agent (unix-path or host:port), default localhost:705
  -p PERIOD   Period to poll VPP, default 30 (seconds)
  -d          Enable debug, default False

## Install
sudo cp dist/vpp-snmp-agent /usr/sbin/
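The help text above is enough to reconstruct the command line as a sketch. This is my guess at an equivalent argparse setup, not the agent's actual source; parse_address is a hypothetical helper that splits -a into a Unix socket path or a host:port pair, treating anything that starts with / as a path.

```python
import argparse
from typing import Tuple, Union


def build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="vpp-snmp-agent")
    parser.add_argument("-a", dest="address", default="localhost:705",
                        help="Location of the SNMPd agent (unix-path or host:port), default localhost:705")
    parser.add_argument("-p", dest="period", type=int, default=30,
                        help="Period to poll VPP, default 30 (seconds)")
    parser.add_argument("-d", dest="debug", action="store_true",
                        help="Enable debug, default False")
    return parser


def parse_address(address: str) -> Tuple[str, Union[str, Tuple[str, int]]]:
    """Return ("unix", path) or ("tcp", (host, port))."""
    if address.startswith("/"):
        return "unix", address
    host, _, port = address.rpartition(":")
    return "tcp", (host, int(port))


args = build_parser().parse_args(["-p", "10"])
print(args.period, args.debug, parse_address(args.address))
```

Note that Python 3.10 and later label the section "options:" rather than "optional arguments:", so the -h output depends on the interpreter that pyinstaller bundles.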

Running

After installing Net-SNMP, the default SNMP daemon in Ubuntu, I have to ensure that it runs in the correct namespace. So I disable the systemd unit that ships with the Ubuntu package, and instead create these:

pim@hippo:~/src/vpp-snmp-agentx$ cat << 'EOF' | sudo tee /usr/lib/systemd/system/netns-dataplane.service
[Unit]
Description=Dataplane network namespace
After=systemd-sysctl.service network-pre.target
Before=network.target network-online.target

[Service]
Type=oneshot
RemainAfterExit=yes

# PrivateNetwork will create network namespace which can be
# used in JoinsNamespaceOf=.
PrivateNetwork=yes

# To set `ip netns` name for this namespace, we create a second namespace
# with required name, unmount it, and then bind our PrivateNetwork
# namespace to it. After this we can use our PrivateNetwork as a named
# namespace in `ip netns` commands.
ExecStartPre=-/usr/bin/echo "Creating dataplane network namespace"
ExecStart=-/usr/sbin/ip netns delete dataplane
ExecStart=-/usr/bin/mkdir -p /etc/netns/dataplane
ExecStart=-/usr/bin/touch /etc/netns/dataplane/resolv.conf
ExecStart=-/usr/sbin/ip netns add dataplane
ExecStart=-/usr/bin/umount /var/run/netns/dataplane
ExecStart=-/usr/bin/mount --bind /proc/self/ns/net /var/run/netns/dataplane
# Apply default sysctl for dataplane namespace
ExecStart=-/usr/sbin/ip netns exec dataplane /usr/lib/systemd/systemd-sysctl
ExecStop=-/usr/sbin/ip netns delete dataplane

[Install]
WantedBy=multi-user.target
WantedBy=network-online.target
EOF

pim@hippo:~/src/vpp-snmp-agentx$ cat << EOF | sudo tee /usr/lib/systemd/system/snmpd-dataplane.service
[Unit]
Description=Simple Network Management Protocol (SNMP) Daemon.
After=network.target
ConditionPathExists=/etc/snmp/snmpd.conf

[Service]
Type=simple
ExecStartPre=/bin/mkdir -p /var/run/agentx-dataplane/
NetworkNamespacePath=/var/run/netns/dataplane
ExecStart=/usr/sbin/snmpd -LOw -u Debian-snmp -g vpp -I -smux,mteTrigger,mteTriggerConf -f -p /run/snmpd-dataplane.pid
ExecReload=/bin/kill -HUP \$MAINPID

[Install]
WantedBy=multi-user.target
EOF

pim@hippo:~/src/vpp-snmp-agentx$ cat << EOF | sudo tee /usr/lib/systemd/system/vpp-snmp-agent.service
[Unit]
Description=SNMP AgentX Daemon for VPP dataplane statistics
After=network.target
ConditionPathExists=/etc/snmp/snmpd.conf

[Service]
Type=simple
NetworkNamespacePath=/var/run/netns/dataplane
ExecStart=/usr/sbin/vpp-snmp-agent
Group=vpp
ExecReload=/bin/kill -HUP \$MAINPID
Restart=on-failure
RestartSec=5s

[Install]
WantedBy=multi-user.target
EOF

Note the use of NetworkNamespacePath here: this ensures that both snmpd and its agent run in the dataplane namespace, which was created by netns-dataplane.service.
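To double-check from inside a process that it really landed in the dataplane namespace, one can compare the device and inode numbers of /proc/self/ns/net with those of the bind mount under /var/run/netns; as far as I know this is also how ip netns identify works. A small sketch, where same_namespace is a hypothetical helper:

```python
import os


def same_namespace(ns_a: str, ns_b: str) -> bool:
    """True if both paths refer to the same object (same st_dev and st_ino)."""
    a, b = os.stat(ns_a), os.stat(ns_b)
    return (a.st_dev, a.st_ino) == (b.st_dev, b.st_ino)


if __name__ == "__main__":
    try:
        inside = same_namespace("/proc/self/ns/net", "/var/run/netns/dataplane")
        print("in dataplane namespace" if inside else "NOT in dataplane namespace")
    except OSError as exc:
        # e.g. not on Linux, or netns-dataplane.service has not run yet
        print(f"cannot check: {exc}")
```

Run through ip netns exec dataplane python3, this should report being inside; run from a normal shell in the default namespace, it should not.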

Results

I now install the binary and, using the snmpd.conf configuration file (see Appendix), switch everything over:

pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl stop snmpd
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl disable snmpd
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl daemon-reload
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl enable netns-dataplane
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl start netns-dataplane
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl enable snmpd-dataplane
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl start snmpd-dataplane
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl enable vpp-snmp-agent
pim@hippo:~/src/vpp-snmp-agentx$ sudo systemctl start vpp-snmp-agent
pim@hippo:~/src/vpp-snmp-agentx$ sudo journalctl -u vpp-snmp-agent
[INFO ] agentx.agent - run : Calling setup
[INFO ] agentx.agent - setup : Connecting to VPP Stats...
[INFO ] agentx.vppapi - connect : Connecting to VPP
[INFO ] agentx.vppapi - connect : VPP version is 21.10-rc0~325-g4976c3b72
[INFO ] agentx.agent - run : Initial update
[INFO ] agentx.network - update : Setting initial serving dataset (740 OIDs)
[INFO ] agentx.agent - run : Opening AgentX connection
[INFO ] agentx.network - connect : Connecting to localhost:705
[INFO ] agentx.network - start : Registering: 1.3.6.1.2.1.2.2.1
[INFO ] agentx.network - start : Registering: 1.3.6.1.2.1.31.1.1.1
[INFO ] agentx.network - update : Replacing serving dataset (740 OIDs)
[INFO ] agentx.network - update : Replacing serving dataset (740 OIDs)
[INFO ] agentx.network - update : Replacing serving dataset (740 OIDs)
[INFO ] agentx.network - update : Replacing serving dataset (740 OIDs)
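The two Registering lines show the subtrees the agent claims: ifTable.ifEntry and ifXTable.ifXEntry. On the wire, RFC 2741 section 5.1 encodes an OID as n_subid, prefix, include and a reserved byte, followed by 32-bit sub-identifiers, and any OID that starts with 1.3.6.1.x (x between 1 and 255) may fold those five leading numbers into the prefix byte. A sketch of that encoding, assuming network byte order; the function names are mine.

```python
import struct
from typing import Tuple

Oid = Tuple[int, ...]
INTERNET: Oid = (1, 3, 6, 1)


def encode_oid(oid: Oid, include: bool = False) -> bytes:
    """Encode an OID, compressing a 1.3.6.1.<x> prefix into the prefix byte."""
    prefix, subids = 0, oid
    if len(oid) >= 5 and oid[:4] == INTERNET and 1 <= oid[4] <= 255:
        prefix, subids = oid[4], oid[5:]
    head = struct.pack("!BBBB", len(subids), prefix, int(include), 0)
    return head + struct.pack(f"!{len(subids)}I", *subids)


def decode_oid(data: bytes, offset: int = 0) -> Tuple[Oid, int]:
    """Return (oid, number of bytes consumed)."""
    n_subid, prefix, _include, _reserved = struct.unpack_from("!BBBB", data, offset)
    subids = struct.unpack_from(f"!{n_subid}I", data, offset + 4)
    oid = (INTERNET + (prefix,) if prefix else ()) + subids
    return oid, 4 + 4 * n_subid


for text in ("1.3.6.1.2.1.2.2.1", "1.3.6.1.2.1.31.1.1.1"):
    oid = tuple(int(x) for x in text.split("."))
    wire = encode_oid(oid)
    print(text, len(wire), wire[:4].hex(), decode_oid(wire)[0] == oid)
```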

Appendix

SNMPd Config

$ cat << EOF | sudo tee /etc/snmp/snmpd.conf
com2sec readonly default <<some-string>>
group MyROGroup v2c readonly
view all included .1 80
access MyROGroup "" any noauth exact all none none

sysLocation Ruemlang, Zurich, Switzerland
sysContact noc@ipng.ch

master agentx
agentXSocket tcp:localhost:705,unix:/var/agentx/master,unix:/run/vpp/agentx.sock
agentaddress udp:161,udp6:161

#OS Distribution Detection
extend distro /usr/bin/distro

#Hardware Detection
extend manufacturer '/bin/cat /sys/devices/virtual/dmi/id/sys_vendor'
extend hardware '/bin/cat /sys/devices/virtual/dmi/id/product_name'
extend serial '/bin/cat /var/run/snmpd.serial'
EOF

Note the use of a few helpers here: /usr/bin/distro comes from LibreNMS [ref] and tries to figure out which distribution is used. The very last line of that script echoes the detected distribution, to which I prepend a string, like echo "VPP ${OSSTR}".

The other file of interest, /var/run/snmpd.serial, is computed at boot time by running the following in /etc/rc.local:

# Assemble serial number for snmpd
BS=$(cat /sys/devices/virtual/dmi/id/board_serial)
PS=$(cat /sys/devices/virtual/dmi/id/product_serial)
echo $BS.$PS > /var/run/snmpd.serial

I have to do this because SNMPd runs as a non-privileged user, yet those DMI elements are readable only by root (for reasons that are beyond me). Seeing as they will not change at runtime anyway, I just create that file and cat it into the serial field. It then shows up nicely in LibreNMS alongside the others.

Oh, and one last thing. The VPP Hound logo!

In LibreNMS, the icons in the devices view use a function that leverages this distro field: it takes the first word (in our case “VPP”) and looks for an icon by that name, with an extension of either .svg or .png, in an icons directory, usually html/images/os/. I dropped the hound from the fd.io homepage in there, and will add the icon upstream for future use in this [librenms PR] and its companion change to [librenms-agent PR].